The cybersecurity community on Reddit responded in disbelief this month when a self-described Air National Guard member with top secret security clearance began questioning the arrangement they’d made with a company called DSLRoot, which was paying $250 a month to plug a pair of laptops into the Redditor’s high-speed Internet connection in the United States. This post examines the history and provenance of DSLRoot, one of the oldest “residential proxy” networks with origins in Russia and Eastern Europe.

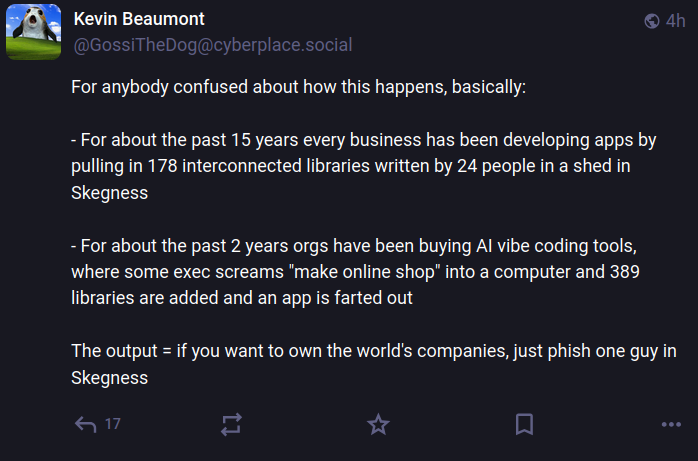

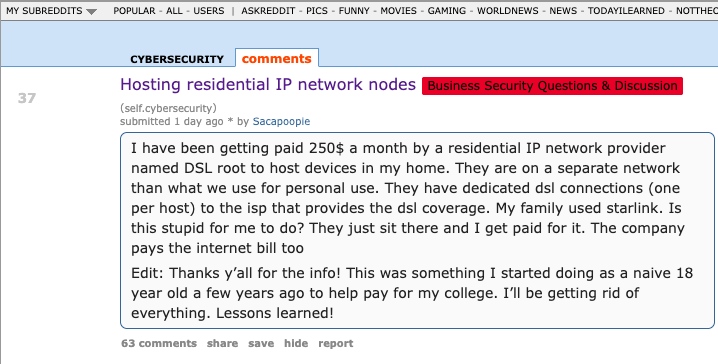

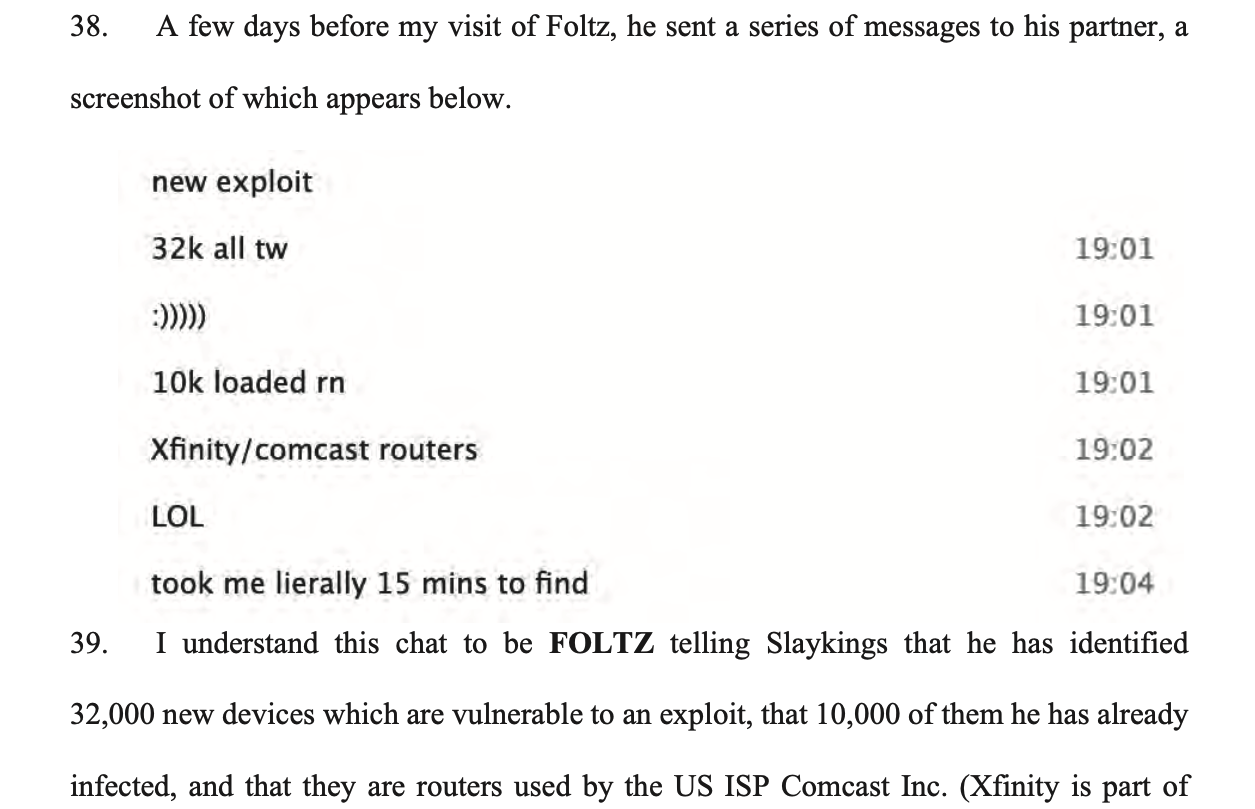

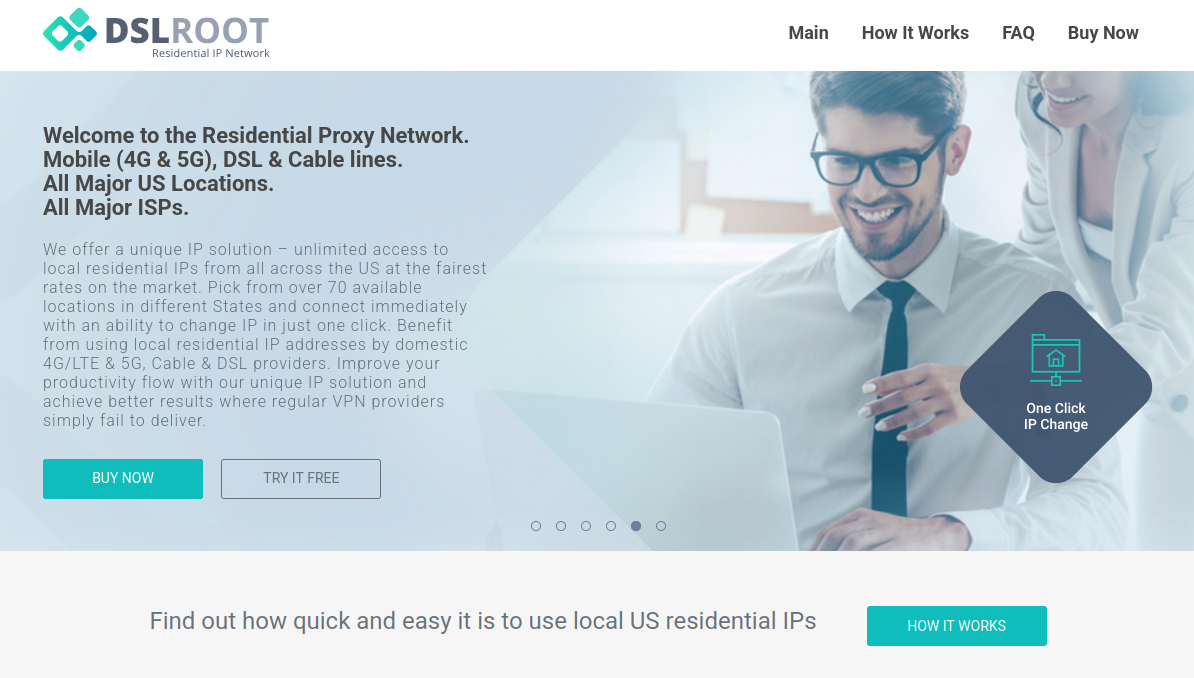

The query about DSLRoot came from a Reddit user “Sacapoopie,” who did not respond to questions. This user has since deleted the original question from their post, although some of their replies to other Reddit cybersecurity enthusiasts remain in the thread. The original post was indexed here by archive.is, and it began with a question:

“I have been getting paid 250$ a month by a residential IP network provider named DSL root to host devices in my home,” Sacapoopie wrote. “They are on a separate network than what we use for personal use. They have dedicated DSL connections (one per host) to the ISP that provides the DSL coverage. My family used Starlink. Is this stupid for me to do? They just sit there and I get paid for it. The company pays the internet bill too.”

Many Redditors said they assumed Sacapoopie’s post was a joke, and that nobody with a cybersecurity background and top-secret (TS/SCI) clearance would agree to let some shady residential proxy company introduce hardware into their network. Other readers pointed to a slew of posts from Sacapoopie in the Cybersecurity subreddit over the past two years about his work on cybersecurity for the Air National Guard.

When pressed for more details by fellow Redditors, Sacapoopie described the equipment supplied by DSLRoot as “just two laptops hardwired into a modem, which then goes to a dsl port in the wall.”

“When I open the computer, it looks like [they] have some sort of custom application that runs and spawns several cmd prompts,” the Redditor explained. “All I can infer from what I see in them is they are making connections.”

When asked how they became acquainted with DSLRoot, Sacapoopie told another user they discovered the company and reached out after viewing an advertisement on a social media platform.

“This was probably 5-6 years ago,” Sacapoopie wrote. “Since then I just communicate with a technician from that company and I help trouble shoot connectivity issues when they arise.”

Reached for comment, DSLRoot said its brand has been unfairly maligned thanks to that Reddit discussion. The unsigned email said DSLRoot is fully transparent about its goals and operations, adding that it operates under full consent from its “regional agents,” the company’s term for U.S. residents like Sacapoopie.

“As although we support honest journalism, we’re against of all kinds of ‘low rank/misleading Yellow Journalism’ done for the sake of cheap hype,” DSLRoot wrote in answer to questions about the company’s intentions. “It’s obvious to us that whoever is doing this, is either lacking a proper understanding of the subject or doing it intentionally to gain exposure by misleading those who lack proper understanding.”

“We monitor our clients and prohibit any illegal activity associated with our residential proxies,” DSLRoot continued. “We honestly didn’t know that the guy who made the Reddit post was a military guy. Be it an African-American granny trying to pay her rent or a white kid trying to get through college, as long as they can provide an Internet line or host phones for us — we’re good.”

WHAT IS DSLROOT?

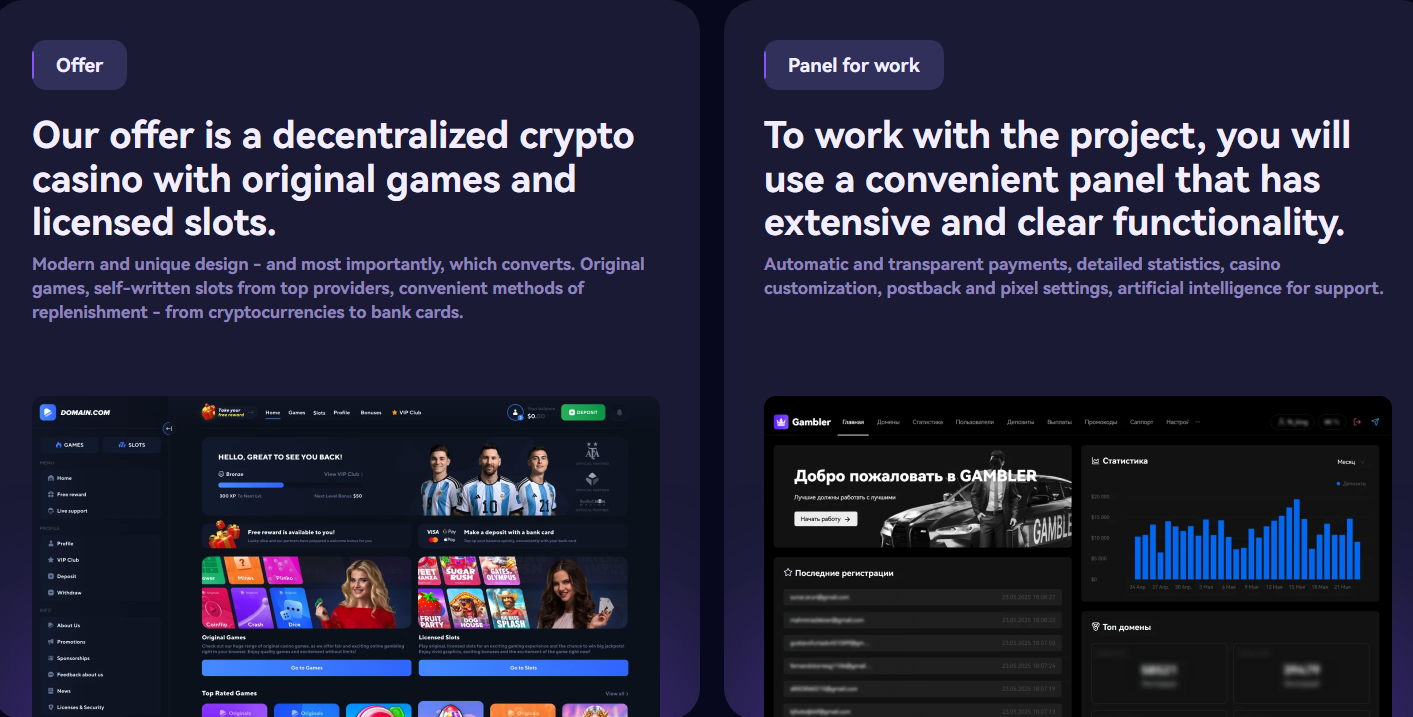

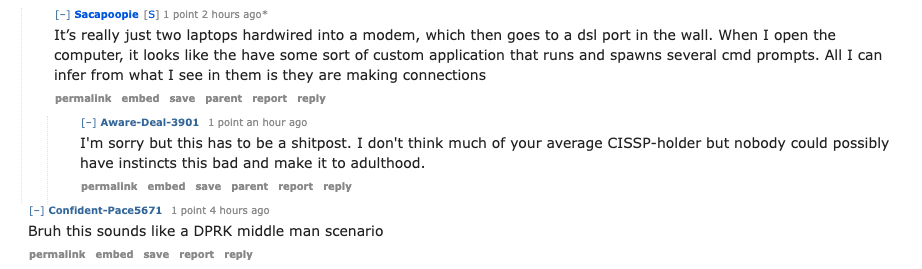

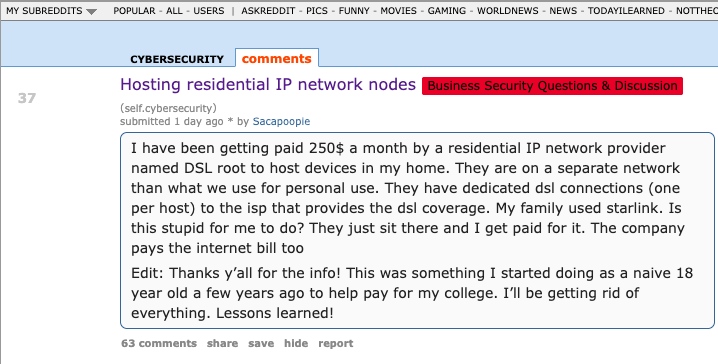

DSLRoot is sold as a residential proxy service on the forum BlackHatWorld under the names DSLRoot and GlobalSolutions. The company is based in the Bahamas and was formed in 2012. The service is advertised to people who are not in the United States but who want to seem like they are. DSLRoot pays people in the United States to run the company’s hardware and software, including 5G mobile devices, and it rents their IP addresses as dedicated proxies to customers anywhere in the world. Unrestricted access to all locations is priced at $190 per month.

The DSLRoot website.

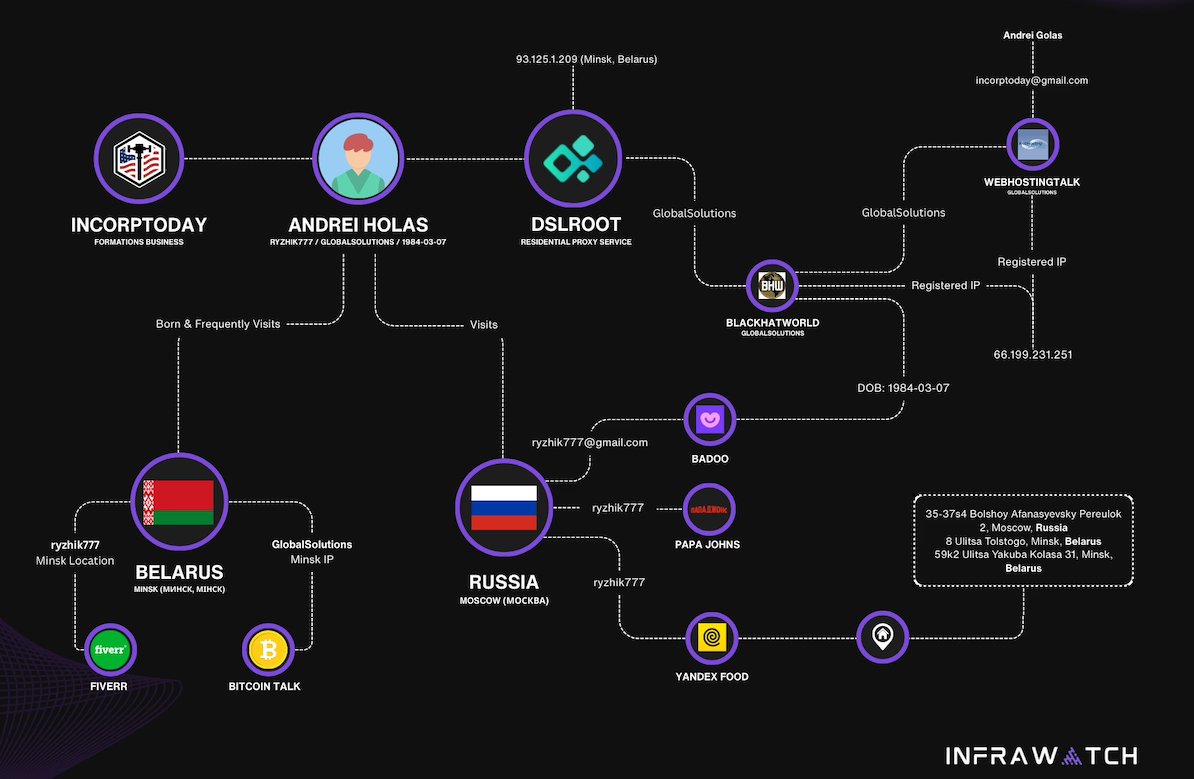

The GlobalSolutions account on BlackHatWorld lists a Telegram account and a WhatsApp number in Mexico. DSLRoot’s profile on the marketing agency digitalpoint.com from 2010 shows their previous username on the forum was “Incorptoday.” GlobalSolutions user accounts at bitcointalk[.]org and roclub[.]com include the email clickdesk@instantvirtualcreditcards[.]com.

Passive DNS records from DomainTools.com show instantvirtualcreditcards[.]com shared a host back then — 208.85.1.164 — with just a handful of domains, including dslroot[.]com, regacard[.]com, 4groot[.]com, residential-ip[.]com, 4gemperor[.]com, ip-teleport[.]com, and proxyrental[.]net.
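The shared-host pivot described above can be illustrated with a minimal Python sketch. It assumes the passive DNS data has already been exported as simple (domain, IP address) pairs; the function names and the `example[.]org` record are hypothetical and are not part of any DomainTools API. Real passive DNS records also carry first-seen and last-seen timestamps, which this sketch ignores.

```python
from collections import defaultdict


def refang(indicator: str) -> str:
    """Convert a defanged indicator such as 'dslroot[.]com' to 'dslroot.com'."""
    return indicator.replace("[.]", ".")


def cohosted_domains(records):
    """Group (domain, ip) pairs by IP address."""
    by_ip = defaultdict(set)
    for domain, ip in records:
        by_ip[ip].add(refang(domain).lower())
    return {ip: sorted(domains) for ip, domains in by_ip.items()}


def pivot(records, seed_domain):
    """Return every other domain that shared an IP address with seed_domain."""
    seed = refang(seed_domain).lower()
    neighbors = set()
    for domains in cohosted_domains(records).values():
        if seed in domains:
            neighbors.update(d for d in domains if d != seed)
    return sorted(neighbors)


# Domain/IP pairs named in the article, plus one hypothetical unrelated record.
RECORDS = [
    ("instantvirtualcreditcards[.]com", "208.85.1.164"),
    ("dslroot[.]com", "208.85.1.164"),
    ("regacard[.]com", "208.85.1.164"),
    ("4groot[.]com", "208.85.1.164"),
    ("residential-ip[.]com", "208.85.1.164"),
    ("4gemperor[.]com", "208.85.1.164"),
    ("ip-teleport[.]com", "208.85.1.164"),
    ("proxyrental[.]net", "208.85.1.164"),
    ("example[.]org", "192.0.2.10"),  # hypothetical, not from the article
]

if __name__ == "__main__":
    for domain in pivot(RECORDS, "instantvirtualcreditcards[.]com"):
        print(domain)
```

A shared IP address is only a lead, since unrelated sites can sit on the same shared hosting server. The link is more persuasive here because the host carried just a handful of domains.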

Cyber intelligence firm Intel 471 finds GlobalSolutions registered on BlackHatWorld in 2016 using the email address prepaidsolutions@yahoo.com. This user shared that their birthday is March 7, 1984.

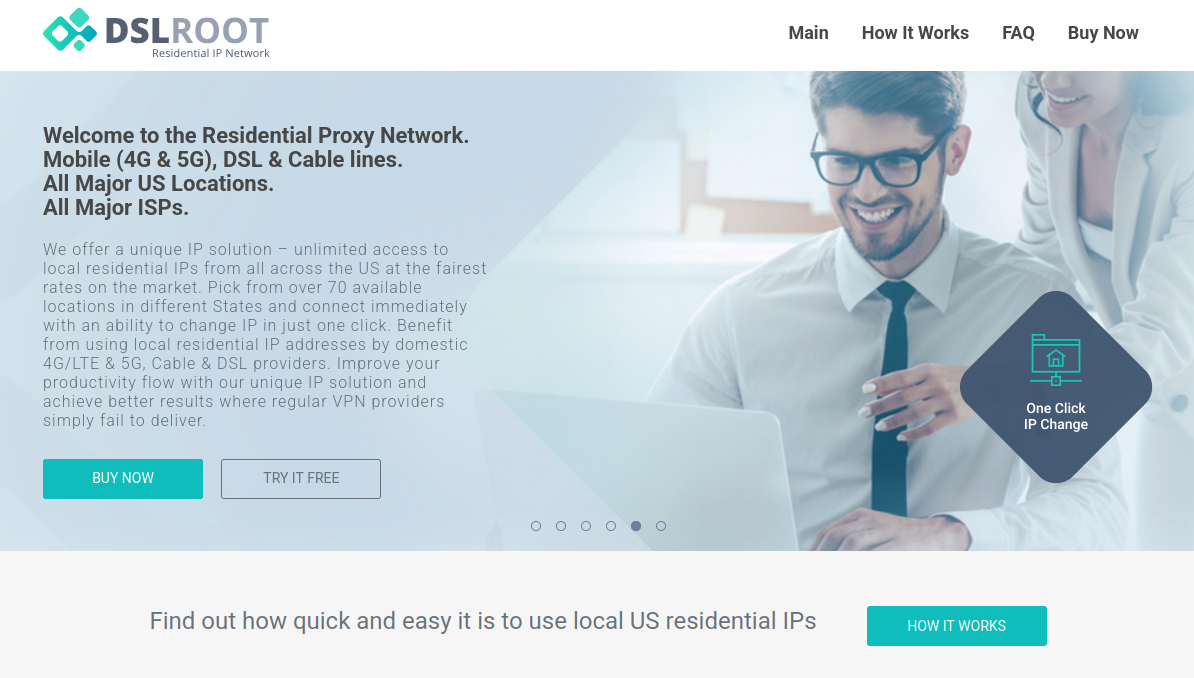

Several negative reviews about DSLRoot on the forums noted that the service was operated by a BlackHatWorld user calling himself “USProxyKing.” Indeed, Intel 471 shows this user told fellow forum members in 2013 to contact him at the Skype username “dslroot.”

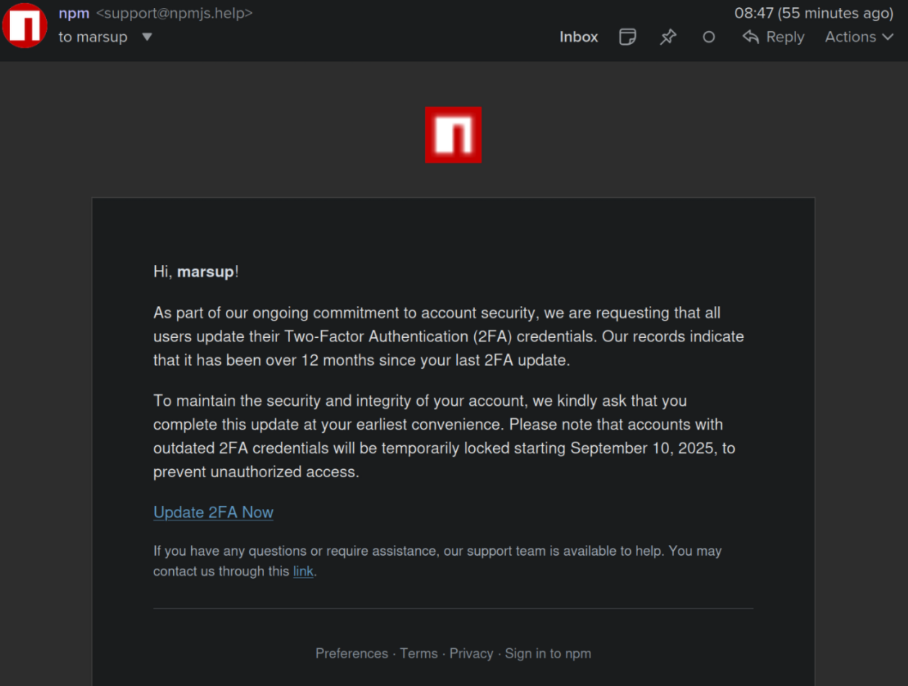

USProxyKing on BlackHatWorld, soliciting installations of his adware via torrents and file-sharing sites.

USProxyKing had a reputation for spamming the forums with ads for his residential proxy service, and he ran a “pay-per-install” program where he paid affiliates a small commission each time one of their websites resulted in the installation of his unspecified “adware” programs — presumably a program that turned host PCs into proxies. On the other end of the business, USProxyKing sold that pay-per-install access to others wishing to distribute questionable software — at $1 per installation.

Private messages indexed by Intel 471 show USProxyKing also raised money from nearly 20 different BlackHatWorld members who were promised shareholder positions in a new business that would offer robocalling services capable of placing 2,000 calls per minute.

Constella Intelligence, a platform that tracks data exposed in breaches, finds that the same IP address GlobalSolutions used to register at BlackHatWorld was also used to create accounts at a handful of sites, including a GlobalSolutions user account at WebHostingTalk that supplied the email address incorptoday@gmail.com. Also registered to incorptoday@gmail.com are the domains dslbay[.]com, dslhub[.]net, localsim[.]com, rdslpro[.]com, virtualcards[.]biz/cc, and virtualvisa[.]cc.

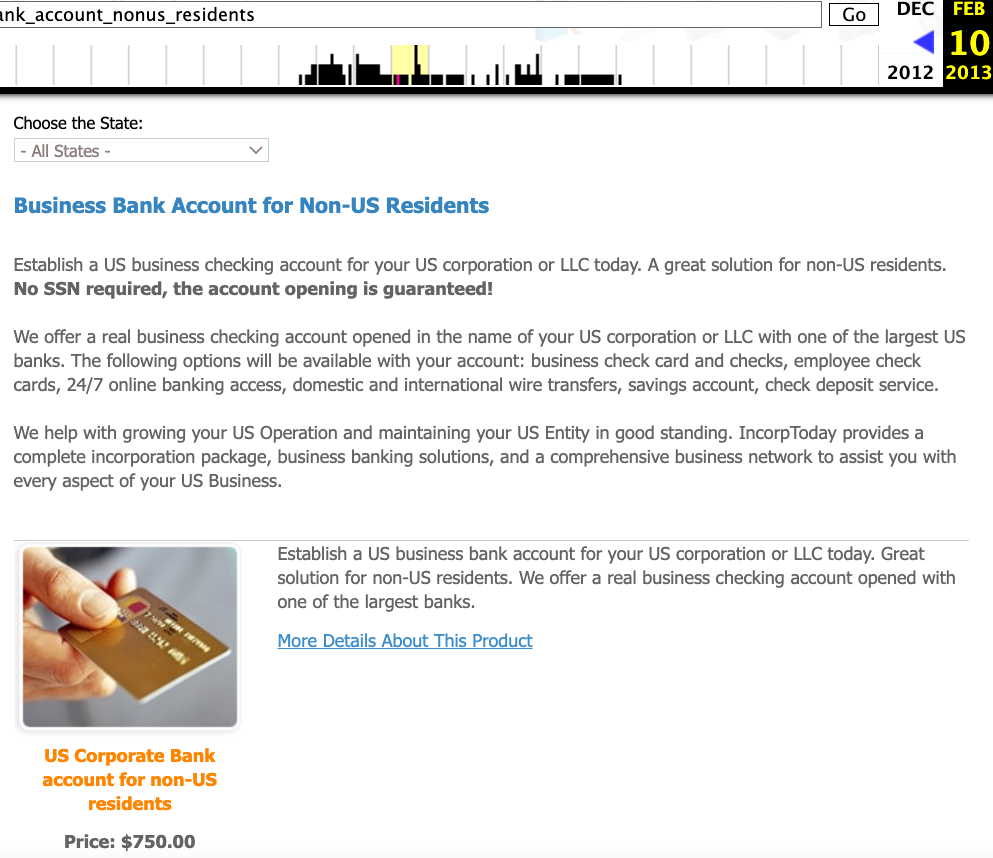

Recall that DSLRoot’s profile on digitalpoint.com was previously named Incorptoday. DomainTools says incorptoday@gmail.com is associated with almost two dozen domains going back to 2008, including incorptoday[.]com, a website that offers to incorporate businesses in several states, including Delaware, Florida and Nevada, for prices ranging from $450 to $550.

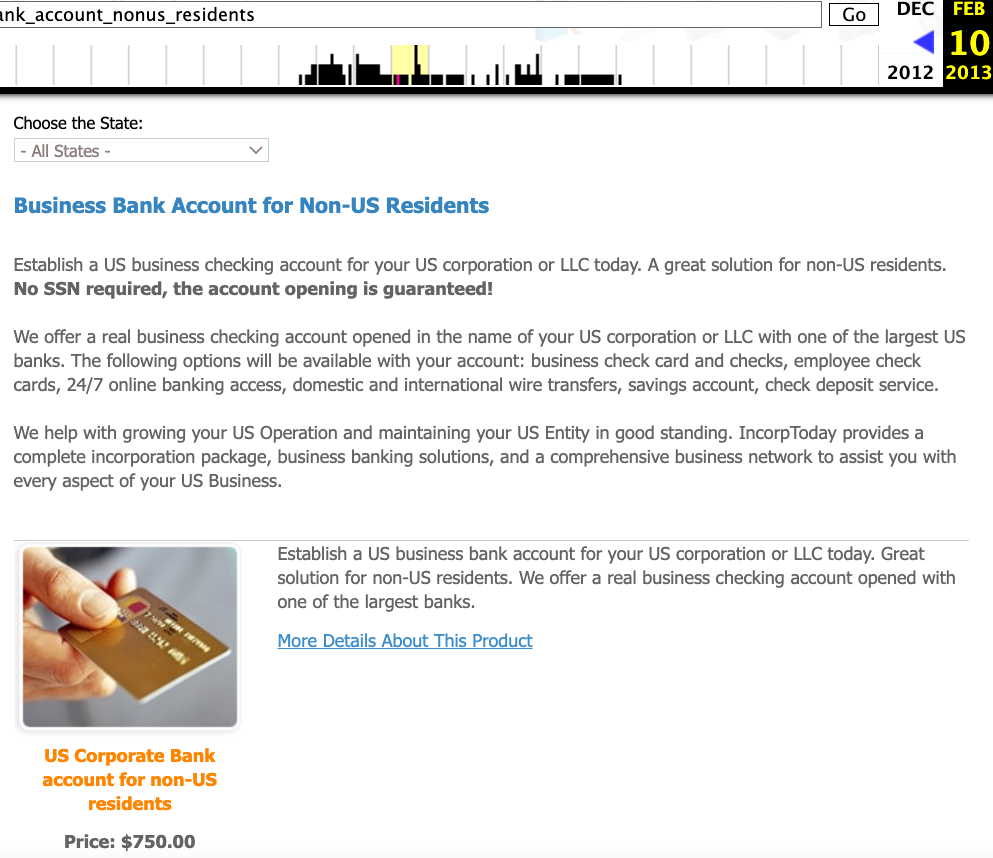

As we can see in this archived copy of the site from 2013, IncorpToday also offered a premier service for $750 that would allow the customer’s new company to have a retail checking account, with no questions asked.

Global Solutions is able to provide access to the U.S. banking system by offering customers prepaid cards that can be loaded with a variety of virtual payment instruments that were popular in Russian-speaking countries at the time, including WebMoney. The cards are limited to $500 balances, but non-Westerners can use them to anonymously pay for goods and services at a variety of Western companies. Cardnow[.]ru, another domain registered to incorptoday@gmail.com, demonstrates this in action.

A copy of Incorptoday’s website from 2013 offers non-US residents a service to incorporate a business in Florida, Delaware or Nevada, along with a no-questions-asked checking account, for $750.

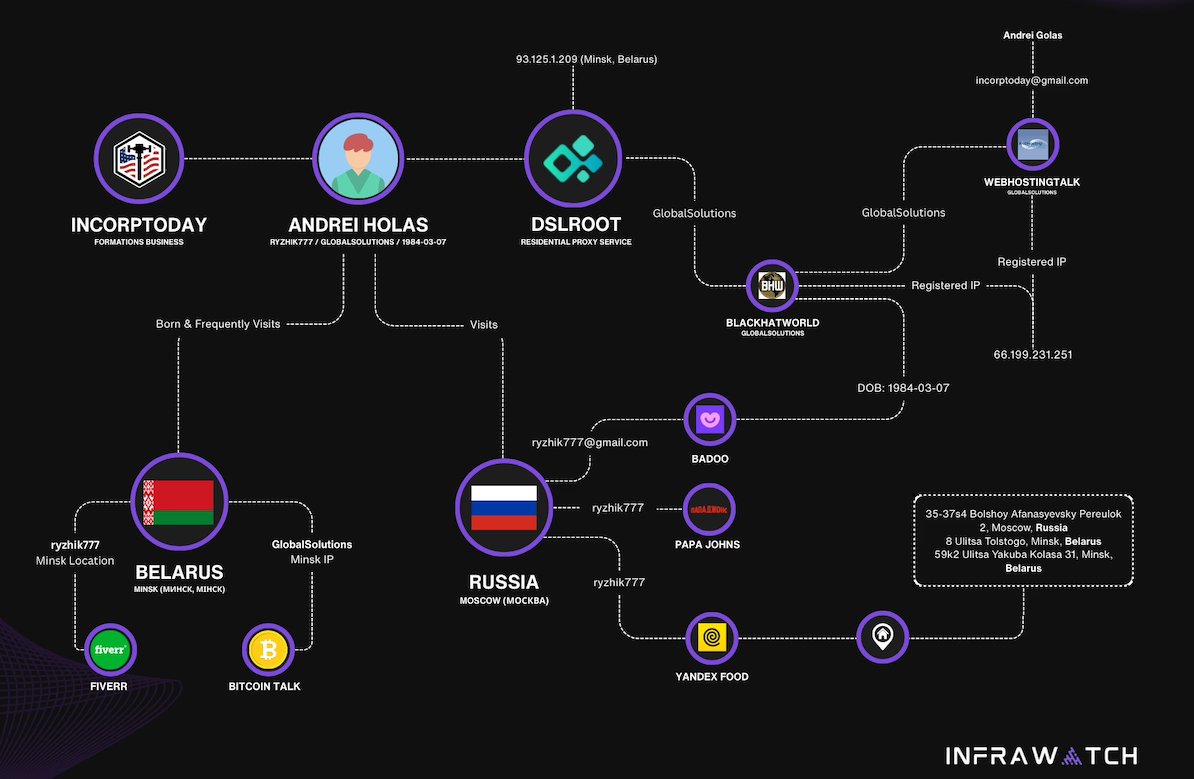

WHO IS ANDREI HOLAS?

The oldest domain (2008) registered to incorptoday@gmail.com is andrei[.]me; another is called andreigolos[.]com. DomainTools says these and other domains registered to that email address include the registrant name Andrei Holas, from Huntsville, Ala.

Public records indicate Andrei Holas has lived with his brother — Aliaksandr Holas — at two different addresses in Alabama. Those records state that Andrei Holas’ birthday is in March 1984, and that his brother is slightly younger. The younger brother did not respond to a request for comment.

Andrei Holas maintained an account on the Russian social network Vkontakte under the email address ryzhik777@gmail.com, an address that shows up in numerous records hacked and leaked from Russian government entities over the past few years.

Those records indicate Andrei Holas and his brother are from Belarus and have maintained an address in Moscow for some time (that address is roughly three blocks away from the main headquarters of the Russian FSB, the successor intelligence agency to the KGB). Hacked Russian banking records show Andrei Holas’ birthday is March 7, 1984 — the same birth date listed by GlobalSolutions on BlackHatWorld.

A 2010 post by ryzhik777@gmail.com at the Russian-language forum Ulitka explains that the poster was having trouble getting his B1/B2 visa to visit his brother in the United States, even though he’d previously been approved for two separate guest visas and a student visa. It remains unclear if one, both, or neither of the Holas brothers still lives in the United States. Andrei explained in 2010 that his brother was an American citizen.

LEGAL BOTNETS

We can all wag our fingers at military personnel who should undoubtedly know better than to install Internet hardware from strangers, but in truth there is an endless supply of U.S. residents who will resell their Internet connection if it means they can make a few bucks out of it. And these days, there are plenty of residential proxy providers who will make it worth your while.

Traditionally, residential proxy networks have been constructed using malicious software that quietly turns infected systems into traffic relays that are then sold in shadowy online forums. Most often, this malware gets bundled with popular cracked software and video files that are uploaded to file-sharing networks. In fact, USProxyKing bragged that he routinely achieved thousands of installs per week via this method alone.

These days, there are a number of residential proxy networks that entice users to monetize their unused bandwidth (inviting you to violate the terms of service of your ISP in the process); others, like DSLRoot, act as a communal VPN, and by using the service you gain access to the connections of other proxies (users) by default, but you also agree to share your connection with others.

Indeed, Intel 471’s archives show the GlobalSolutions and DSLRoot accounts routinely received private messages from forum users who were college students or young people who were trying to make ends meet. Those messages show that many of DSLRoot’s “regional agents” often sought commissions to refer friends interested in reselling their home Internet connections (DSLRoot would offer to cover the monthly cost of the agent’s home Internet connection).

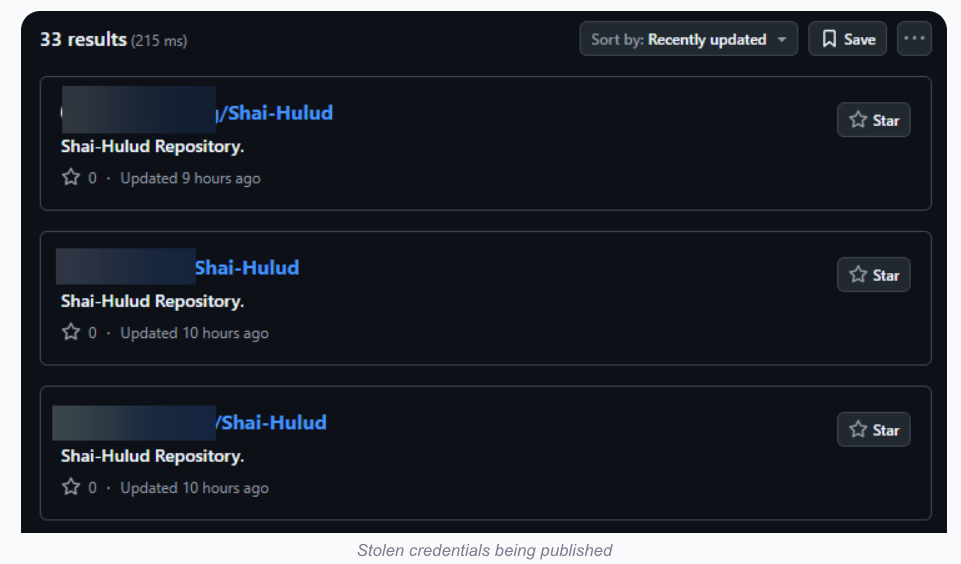

But in an era when North Korean hackers are relentlessly posing as Western IT workers by paying people to host laptop farms in the United States, letting strangers run laptops, mobile devices or any other hardware on your network seems like an awfully risky move regardless of your station in life. As several Redditors pointed out in Sacapoopie’s thread, an Arizona woman was sentenced in July 2025 to 102 months in prison for hosting a laptop farm that helped North Korean hackers secure jobs at more than 300 U.S. companies, including Fortune 500 firms.

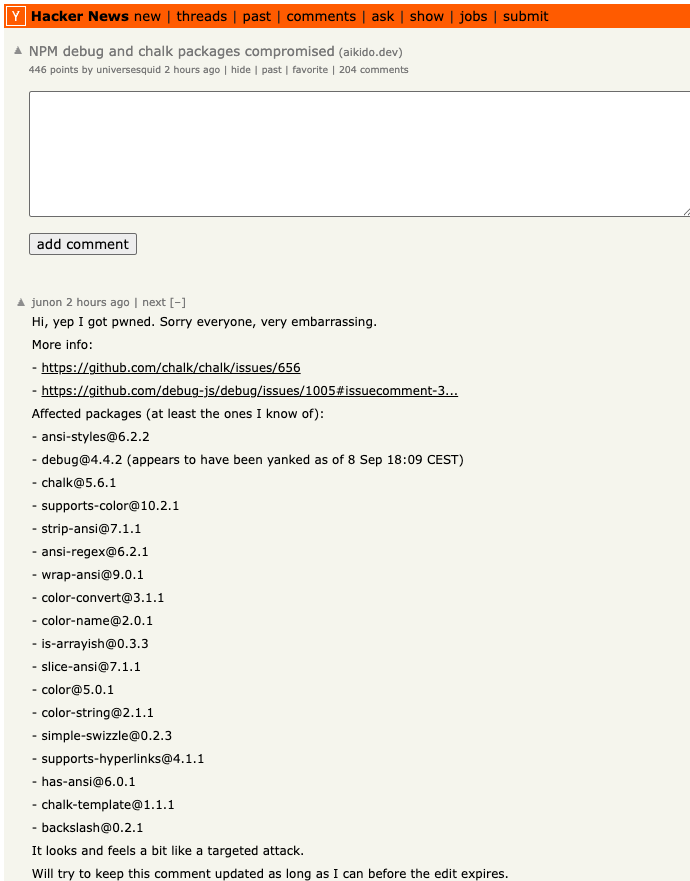

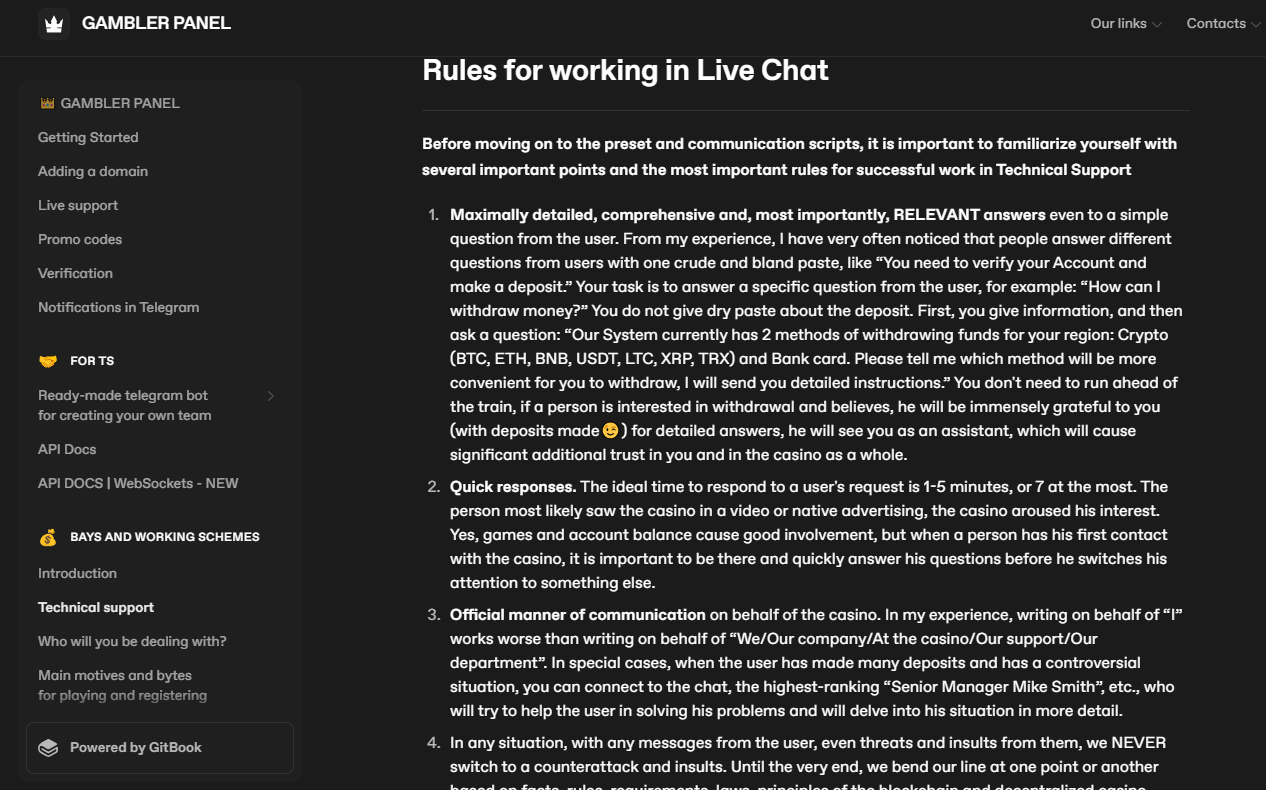

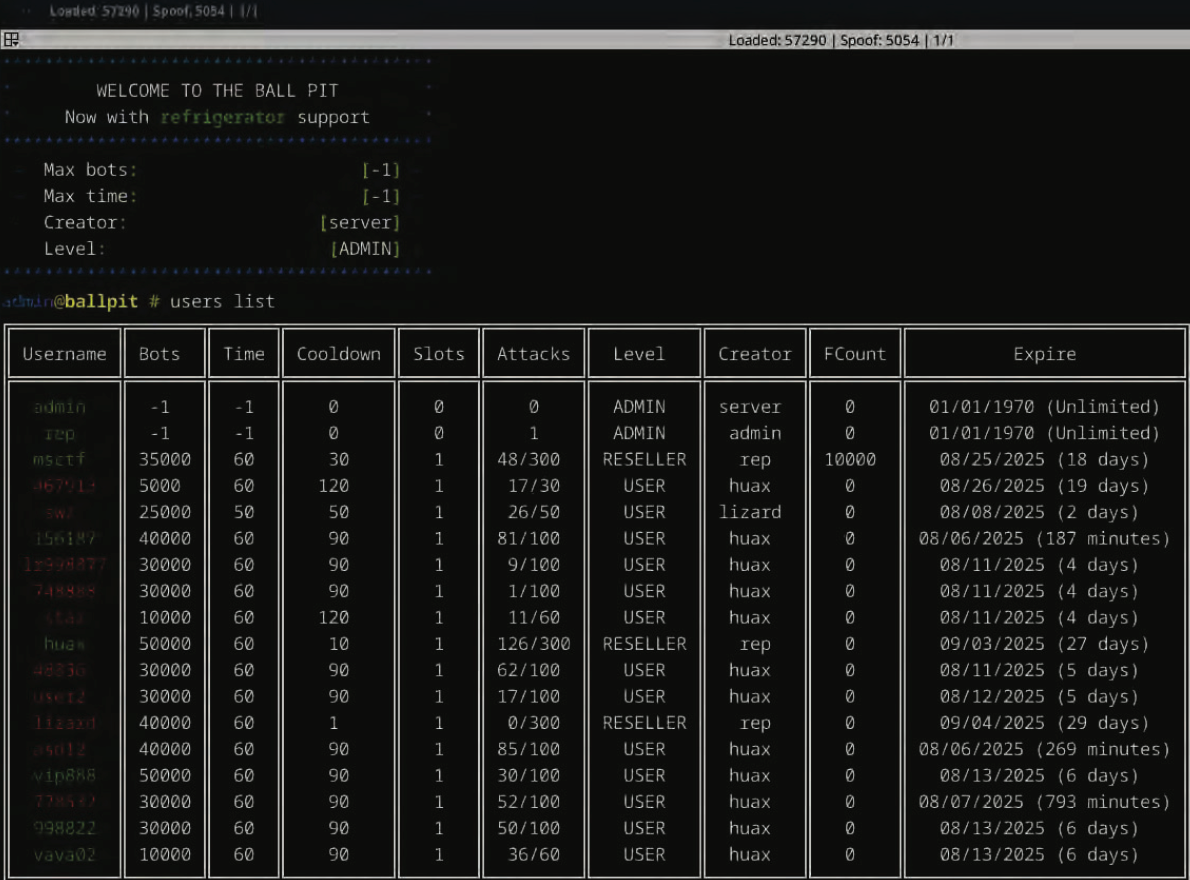

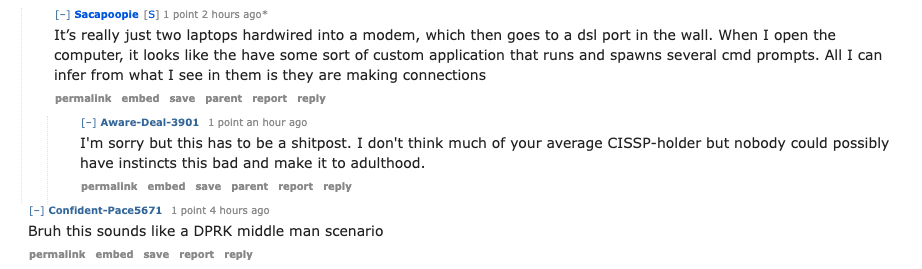

Lloyd Davies is the founder of Infrawatch, a security startup based in London. Davies said he reverse engineered the software that powers DSLRoot’s proxy service, and found it phones home to the aforementioned domain proxyrental[.]net, which sells a service that promises to “get your ads live in multiple cities without getting banned, flagged or ghosted” (presumably a reference to Craigslist ads).

Davies said he found the DSLRoot installer had capabilities to remotely control residential networking equipment across multiple vendor brands.

Image: Infrawatch.app.

“The software employs vendor-specific exploits and hardcoded administrative credentials, suggesting DSLRoot pre-configures equipment before deployment,” Davies wrote in an analysis published today. He said the software performs WiFi network enumeration to identify nearby wireless networks, thereby “potentially expanding targeting capabilities beyond the primary internet connection.”

It’s unclear exactly when USProxyKing was deposed from his throne, but DSLRoot and its proxy offerings are not what they used to be. Davies said the entire DSLRoot network now has fewer than 300 nodes nationwide, mostly systems on DSL providers like CenturyLink and Frontier.

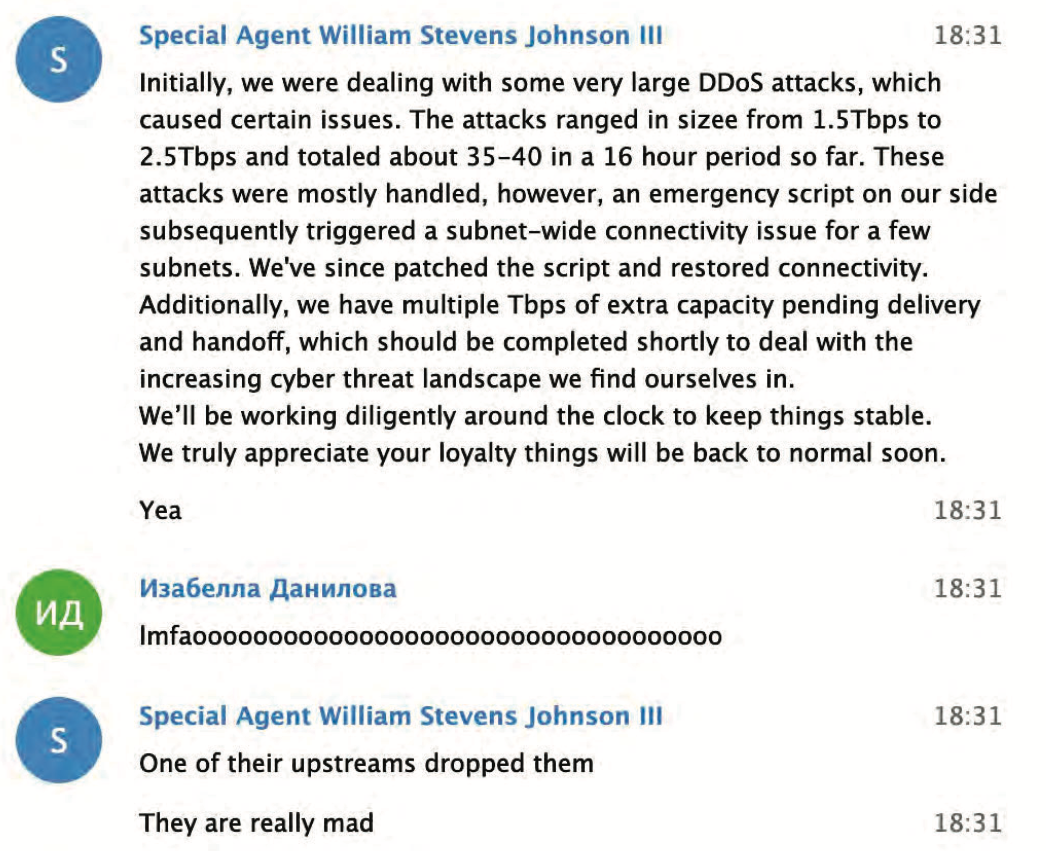

On Aug. 17, GlobalSolutions posted to BlackHatWorld saying, “We’re restructuring our business model by downgrading to ‘DSL only’ lines (no mobile or cable).” Asked via email about the changes, DSLRoot blamed the decline in its customers on the proliferation of residential proxy services.

“These days it has become almost impossible to compete in this niche as everyone is selling residential proxies and many companies want you to install a piece of software on your phone or desktop so they can resell your residential IPs on a much larger scale,” DSLRoot explained. “So-called ‘legal botnets’ as we see them.”