—————

Free Secure Email – Transcom Sigma

Boost Inflight Internet

Transcom Hosting

Transcom Premium Domains

News

Ransomware Groups Prioritize Defense Evasion for Data Exfiltration

—————

Russian Media Uses AI-Powered Software to Spread Disinformation

—————

Most Security Pros Admit Shadow SaaS and AI Use

—————

Microsoft Fixes Four Zero-Days in July Patch Tuesday

—————

Sophos ZTNA now supports on-premise Microsoft AD

—————

RADIUS Vulnerability

New attack against the RADIUS authentication protocol:

The Blast-RADIUS attack allows a man-in-the-middle attacker between the RADIUS client and server to forge a valid protocol accept message in response to a failed authentication request. This forgery could give the attacker access to network devices and services without the attacker guessing or brute forcing passwords or shared secrets. The attacker does not learn user credentials.

This is one of those vulnerabilities that comes with a cool name, its own website, and a logo.
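The quoted description does not say which part of the protocol gets forged. Public write-ups of Blast-RADIUS point to the Response Authenticator defined in RFC 2865, an MD5 hash over the response packet, the Request Authenticator, and the shared secret. The sketch below shows only how that field is computed and checked, to make clear what an "accept message" has to carry in order to be considered valid. It does not implement the attack, and the function names are illustrative assumptions rather than any library's API.

```python
import hashlib
import hmac
import struct

ACCESS_ACCEPT = 2  # RADIUS packet codes from RFC 2865
ACCESS_REJECT = 3


def response_authenticator(code, identifier, request_auth, attributes, secret):
    """MD5(Code + ID + Length + RequestAuth + Attributes + Secret), per RFC 2865."""
    length = 20 + len(attributes)  # 20-byte header plus attribute bytes
    header = struct.pack("!BBH", code, identifier, length)
    return hashlib.md5(header + request_auth + attributes + secret).digest()


def build_response(code, identifier, request_auth, attributes, secret):
    """Assemble a response packet the way a RADIUS server would (illustrative helper)."""
    auth = response_authenticator(code, identifier, request_auth, attributes, secret)
    header = struct.pack("!BBH", code, identifier, 20 + len(attributes))
    return header + auth + attributes


def verify_response(packet, request_auth, secret):
    """Client-side check: recompute the hash and compare it to bytes 4..20."""
    if len(packet) < 20:
        return False
    code, identifier, length = struct.unpack("!BBH", packet[:4])
    if length != len(packet):
        return False
    expected = response_authenticator(
        code, identifier, request_auth, packet[20:], secret
    )
    return hmac.compare_digest(expected, packet[4:20])
```

Because the only integrity check here is a plain MD5 over attacker-influenced bytes plus the secret, the published mitigations centre on requiring the HMAC-based Message-Authenticator attribute or running RADIUS over TLS.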

—————

Olympics Has Fallen – A Misinformation Campaign Featuring Elon Musk

Authored by Lakshya Mathur and Abhishek Karnik

As we gear up for the 2024 Paris Olympics, excitement is building, and so is the potential for scams. From fake ticket sales to counterfeit merchandise, scammers are on the prowl, leveraging big events to trick unsuspecting fans. Recently, McAfee researchers uncovered a particularly malicious scam that not only aims to deceive but also to portray the International Olympic Committee (IOC) as corrupt.

This scam relies on sophisticated social engineering techniques, which have become more accessible than ever thanks to advances in artificial intelligence (AI). Tools like audio cloning enable scammers to create convincing fake audio messages at low cost. These technologies were highlighted in McAfee’s AI Impersonator report last year, showcasing the growing threat of such tech in the hands of fraudsters.

The latest scheme involves a fictitious Amazon Prime series titled “Olympics has Fallen II: The End of Thomas Bach,” narrated by a deepfake version of Elon Musk’s voice. This fake series was reported to have been released on a Telegram channel on June 24th, 2024. It’s a stark reminder of the lengths to which scammers will go to spread misinformation and exploit public figures to create believable narratives.

As we approach the Olympic Games, it’s crucial to stay vigilant and question the authenticity of sensational claims, especially those found on less regulated platforms like Telegram. Always verify information through official channels to avoid falling victim to these sophisticated scams.

Cover Image of the series

This series seems to be the work of the same creator who, a year ago, put out a similar short series titled “Olympics has Fallen,” falsely presented as a Netflix series featuring a deepfake voice of Tom Cruise. With the Olympics less than a month away, this new release looks to be a sequel to last year’s fabrication.

Image and Description of last year’s released series

These so-called documentaries are currently being distributed via Telegram channels. The primary aim of this series is to target the Olympics and discredit its leadership. Within just a week of its release, the series has already attracted over 150,000 viewers, and the numbers continue to climb.

In addition to claiming to be an Amazon Prime story, the creators of this content have also circulated images of what seem to be fabricated endorsements and reviews from reputable publishers, enhancing their attempt at social engineering.

Fake endorsement of famous publishers

This three-part series uses AI voice cloning, image diffusion, and lip-sync to piece together a fake narration. A lot of effort has gone into making the video look like a professionally created series. However, the video contains telltale hints, such as the picture-in-picture overlay that appears at various points in the series; close observation of that overlay reveals glitches.

Overlay video within the series with some discrepancies

The original video appears to be from a Wall Street Journal (WSJ) interview that has then been altered and modified (notice the background). The audio clone is almost indiscernible by human inspection.

Original video snapshot from WSJ Interview

Modified and altered screenshot from part 3 of the fake series

Episode thumbnails and their descriptions captured from the Telegram channel

Elon Musk’s voice has been a target for impersonation before. In fact, McAfee’s 2023 Hacker Celebrity Hot List placed him at number six, highlighting his status as one of the most frequently mimicked public figures in cryptocurrency scams.

As the prevalence of deepfakes and related scams continues to grow, along with campaigns of misinformation and disinformation, McAfee has developed deepfake audio detection technology. Showcased on Intel’s AI PCs at RSA in May, McAfee’s Deepfake Detector – formerly known as Project Mockingbird – helps people discern truth from fiction and defends consumers against cybercriminals utilizing fabricated, AI-generated audio to carry out scams that rob people of money and personal information, enable cyberbullying, and manipulate the public image of prominent figures.

With the 2024 Olympics on the horizon, McAfee predicts a surge in scams involving AI tools. Whether you’re planning to travel to the summer Olympics or just following the excitement from home, it’s crucial to remain alert. Be wary of unsolicited text messages offering deals, steer clear of unfamiliar websites, and be skeptical of the information shared on various social platforms. It’s important to maintain a critical eye and use tools that enhance your online safety.

McAfee is committed to empowering consumers to make informed decisions by providing tools that identify AI-generated content and raising awareness about their application where necessary.

AI-generated content is becoming increasingly believable nowadays. Some key recommendations while viewing content online:

- Be skeptical of content from untrusted sources – Always question the motive. In this case, the content is accessible on Telegram channels and posted to uncommon public cloud storage.

- Be vigilant while viewing the content – Most AI fabrications will have some flaws, although it’s becoming increasingly difficult to spot such discrepancies at a glance. In this video, we noted some obvious indicators that appeared to be forged; the audio, however, is slightly more complicated to assess.

- Cross-verify information – Searching for the title on popular search engines, or searching Amazon Prime content, would very quickly lead consumers to realize that something is amiss.

The post Olympics Has Fallen – A Misinformation Campaign Featuring Elon Musk appeared first on McAfee Blog.

—————

What Is Generative AI and How Does It Work?

It’s all anyone can talk about. In classrooms, boardrooms, on the nightly news, and around the dinner table, artificial intelligence (AI) is dominating conversations. With the passion with which everyone is debating, celebrating, and villainizing AI, you’d think it was a completely new technology; however, AI has been around in various forms for decades. Only now is it accessible to everyday people like you and me.

The most famous of these mainstream AI tools are ChatGPT, DALL-E, and Bard, among others. The specific technology that links these tools is called generative artificial intelligence. Sometimes shortened to gen AI, you’re likely to have heard this term in the same sentence as deepfake, AI art, and ChatGPT. But how does the technology work?

Here’s a simple explanation of how generative AI powers many of today’s famous (or infamous) AI tools.

What Is Generative AI?

Generative AI is the specific type of artificial intelligence that powers many of the AI tools available today in the pockets of the public. The “G” in ChatGPT stands for generative. Today’s gen AI evolved from the chatbots of the 1960s. Now, as AI and related technologies like deep learning and machine learning have evolved, generative AI can answer prompts, create text, art, and videos, and even simulate convincing human voices.

How Does Generative AI Work?

Think of generative AI as a sponge that desperately wants to delight the users who ask it questions.

First, a gen AI model begins with a massive information deposit. Gen AI can soak up huge amounts of data. For instance, ChatGPT is trained on 300 billion words and hundreds of megabytes worth of facts. The AI will remember every piece of information that is fed into it. Additionally, it will use those nuggets of knowledge to inform any answer it spits out.

From there, some gen AI models rely on a generative adversarial network (GAN), in which two neural networks compete: one generates output and the other judges how convincing it is. This competition pushes the model to outdo itself and produce the result it rates as most accurate. The more data and queries the AI processes, the “smarter” it becomes.

Google’s content generation tool, Bard, is a great way to illustrate generative AI in action. Bard is based on gen AI and large language models. It’s trained on all types of literature, and when asked to write a short story, it does so by finding language patterns and composing by choosing words that most often follow the one preceding it. In a 60 Minutes segment, Bard composed an eloquent short story that nearly brought the presenter to tears, but its composition was an exercise in patterns, not a display of understanding human emotions. So, while the technology is certainly smart, it’s not exactly creative.
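The idea of "choosing words that most often follow the one preceding it" can be shown with a toy bigram counter. This is a deliberately tiny sketch to illustrate pattern-following, not a description of how Bard or any real large language model is built; the function names and the sample sentence are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def generate(followers, start, max_words=8):
    """Repeatedly append the most frequent follower of the last word."""
    out = [start]
    while len(out) < max_words and followers.get(out[-1]):
        most_likely_next = followers[out[-1]].most_common(1)[0][0]
        out.append(most_likely_next)
    return " ".join(out)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(generate(model, "on", max_words=3))
```

The output is fluent-looking only because the counts encode which words tended to appear together in the training text. Nothing in the loop represents meaning, which is the point the paragraph above makes about patterns versus understanding.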

How to Use Generative AI Responsibly

The major debates surrounding generative AI usually deal with how to use gen AI-powered tools for good. For instance, ChatGPT can be an excellent outlining partner if you’re writing an essay or completing a task at work; however, it’s irresponsible and is considered cheating if a student or an employee submits ChatGPT-written content word for word as their own work. If you do decide to use ChatGPT, it’s best to be transparent that it helped you with your assignment. Cite it as a source and make sure to double-check your work!

One lawyer got in serious trouble when he trusted ChatGPT to write an entire brief and then didn’t take the time to edit its output. It turns out that much of the content was incorrect and cited sources that didn’t exist. This is a phenomenon known as an AI hallucination, meaning the program fabricated a response instead of admitting that it didn’t know the answer to the prompt.

Deepfake and voice simulation technology supported by generative AI are other applications that people must use responsibly and with transparency. Deepfake and AI voices are gaining popularity in viral videos and on social media. Posters use the technology in funny skits poking fun at celebrities, politicians, and other public figures. However, to avoid confusing the public and possibly spurring fake news reports, these comedians have a responsibility to add a disclaimer that the real person was not involved in the skit. Fake news reports can spread with the speed and ferocity of wildfire.

The widespread use of generative AI doesn’t necessarily mean the internet is a less authentic or a riskier place. It just means that people must use sound judgment and hone their radar for identifying malicious AI-generated content. Generative AI is an incredible technology. When used responsibly, it can add great color, humor, or a different perspective to written, visual, and audio content.

The post What Is Generative AI and How Does It Work? appeared first on McAfee Blog.

—————

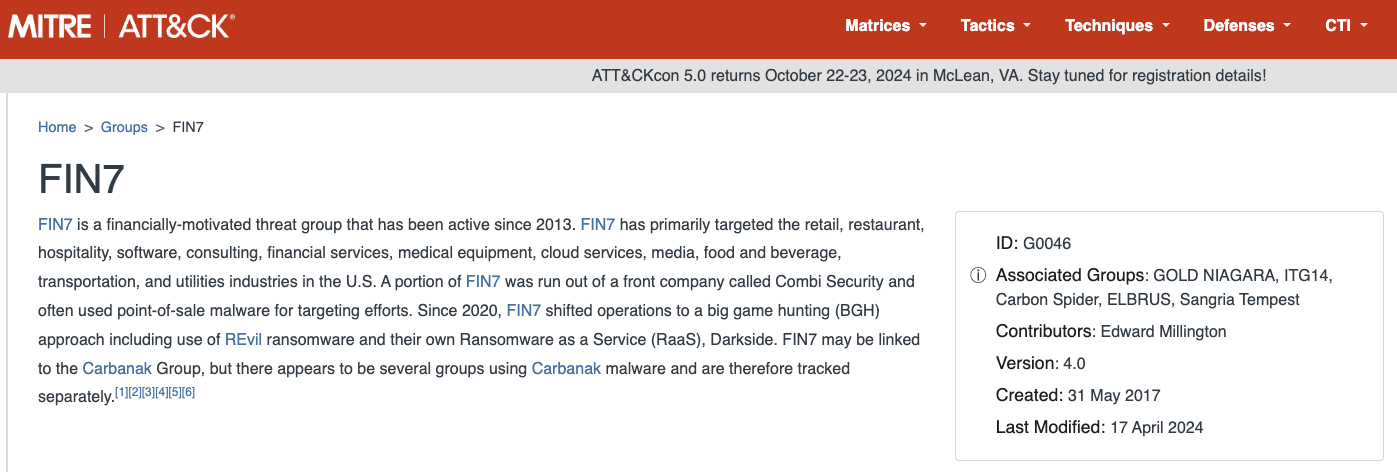

The Stark Truth Behind the Resurgence of Russia’s Fin7

The Russia-based cybercrime group dubbed “Fin7,” known for phishing and malware attacks that have cost victim organizations an estimated $3 billion in losses since 2013, was declared dead last year by U.S. authorities. But experts say Fin7 has roared back to life in 2024 — setting up thousands of websites mimicking a range of media and technology companies — with the help of Stark Industries Solutions, a sprawling hosting provider that is a persistent source of cyberattacks against enemies of Russia.

In May 2023, the U.S. attorney for Washington state declared “Fin7 is an entity no more,” after prosecutors secured convictions and prison sentences against three men found to be high-level Fin7 hackers or managers. This was a bold declaration against a group that the U.S. Department of Justice described as a criminal enterprise with more than 70 people organized into distinct business units and teams.

The first signs of Fin7’s revival came in April 2024, when BlackBerry wrote about an intrusion at a large automotive firm that began with malware served by a typosquatting attack targeting people searching for a popular free network scanning tool.

Now, researchers at security firm Silent Push say they have devised a way to map out Fin7’s rapidly regrowing cybercrime infrastructure, which includes more than 4,000 hosts that employ a range of exploits, from typosquatting and booby-trapped ads to malicious browser extensions and spearphishing domains.

Silent Push said it found Fin7 domains targeting or spoofing brands including American Express, Affinity Energy, Airtable, Alliant, Android Developer, Asana, Bitwarden, Bloomberg, Cisco (Webex), CNN, Costco, Dropbox, Grammarly, Google, Goto.com, Harvard, Lexis Nexis, Meta, Microsoft 365, Midjourney, Netflix, Paycor, Quickbooks, Quicken, Reuters, Regions Bank Onepass, RuPay, SAP (Ariba), Trezor, Twitter/X, Wall Street Journal, Westlaw, and Zoom, among others.

Zach Edwards, senior threat analyst at Silent Push, said many of the Fin7 domains are innocuous-looking websites for generic businesses that sometimes include text from default website templates (the content on these sites often has nothing to do with the entity’s stated business or mission).

Edwards said Fin7 does this to “age” the domains and to give them a positive or at least benign reputation before they’re eventually converted for use in hosting brand-specific phishing pages.

“It took them six to nine months to ramp up, but ever since January of this year they have been humming, building a giant phishing infrastructure and aging domains,” Edwards said of the cybercrime group.

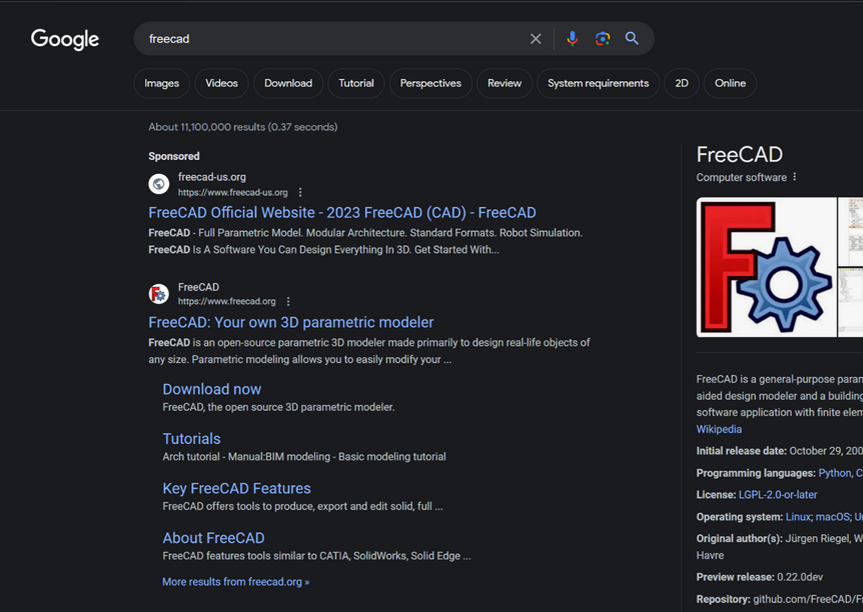

In typosquatting attacks, Fin7 registers domains that are similar to those for popular free software tools. Those look-alike domains are then advertised on Google so that sponsored links to them show up prominently in search results, usually above the legitimate source of the software in question.

A malicious site spoofing FreeCAD showed up prominently as a sponsored result in Google search results earlier this year.

According to Silent Push, the software currently being targeted by Fin7 includes 7-zip, PuTTY, ProtectedPDFViewer, AIMP, Notepad++, Advanced IP Scanner, AnyDesk, pgAdmin, AutoDesk, Bitwarden, Rest Proxy, Python, Sublime Text, and Node.js.
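One common way defenders look for typosquatting of this kind is to compare newly seen domains against a list of legitimate ones and flag near misses. The sketch below uses plain edit distance for that. It is not Silent Push's method, which the article does not describe; the threshold, the function names, and the sample domains are assumptions chosen for illustration.

```python
def edit_distance(a, b):
    """Levenshtein distance: minimum single-character edits turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute
        prev = curr
    return prev[-1]


def flag_lookalike(domain, legit_domains, max_distance=2):
    """Return (legit_domain, distance) for the closest near match, else None.

    An exact match is the legitimate site itself, so it is never flagged.
    """
    domain = domain.lower().rstrip(".")
    best = None
    for legit in legit_domains:
        distance = edit_distance(domain, legit.lower())
        if distance == 0:
            return None
        if distance <= max_distance and (best is None or distance < best[1]):
            best = (legit, distance)
    return best


# Illustrative allowlist and a made-up look-alike; not indicators from the report.
known_good = ["python.org", "putty.org"]
print(flag_lookalike("pyth0n.org", known_good))
```

Edit distance alone misses look-alikes built from added words or a different top-level domain, so in practice it would be one signal among several rather than a complete detector.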

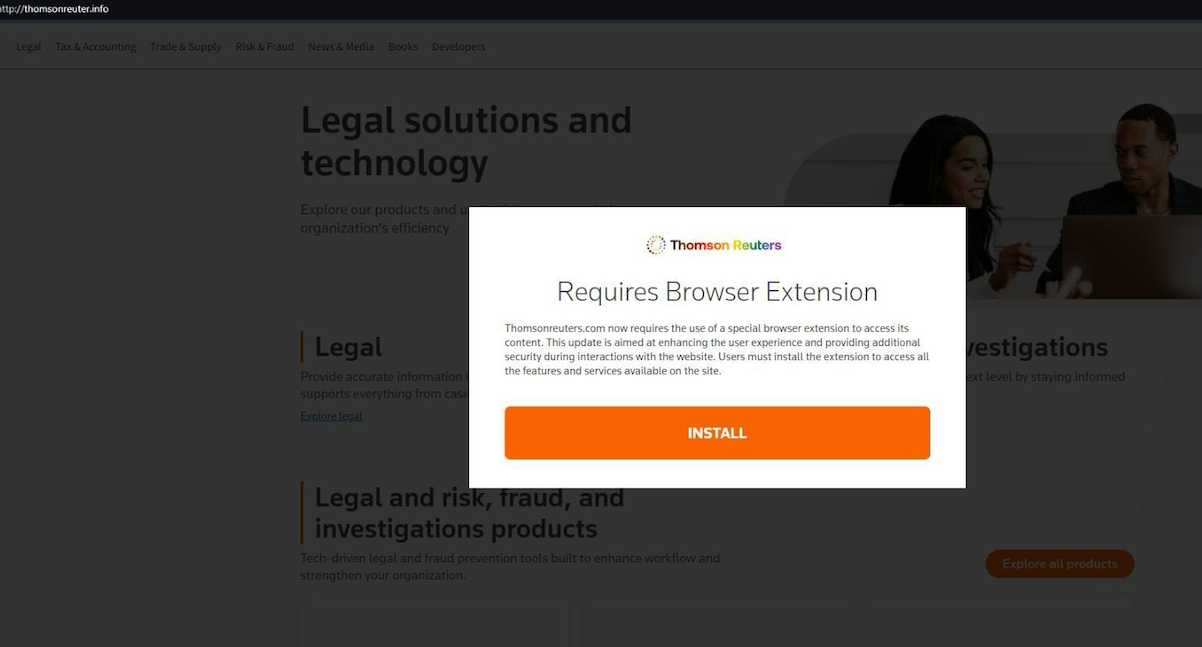

In May 2024, security firm eSentire warned that Fin7 was spotted using sponsored Google ads to serve pop-ups prompting people to download phony browser extensions that install malware. Malwarebytes blogged about a similar campaign in April, but did not attribute the activity to any particular group.

A pop-up at a Thomson Reuters typosquatting domain telling visitors they need to install a browser extension to view the news content.

Edwards said Silent Push discovered the new Fin7 domains after hearing from an organization that was targeted by Fin7 in years past and suspected the group was once again active. Searching for hosts that matched Fin7’s known profile revealed just one active site. But Edwards said that one site pointed to many other Fin7 properties at Stark Industries Solutions, a large hosting provider that materialized just two weeks before Russia invaded Ukraine.

As KrebsOnSecurity wrote in May, Stark Industries Solutions is being used as a staging ground for wave after wave of cyberattacks against Ukraine that have been tied to Russian military and intelligence agencies.

“FIN7 rents a large amount of dedicated IP on Stark Industries,” Edwards said. “Our analysts have discovered numerous Stark Industries IPs that are solely dedicated to hosting FIN7 infrastructure.”

Fin7 once famously operated behind fake cybersecurity companies — with names like Combi Security and Bastion Secure — which they used for hiring security experts to aid in ransomware attacks. One of the new Fin7 domains identified by Silent Push is cybercloudsec[.]com, which promises to “grow your business with our IT, cyber security and cloud solutions.”

The fake Fin7 security firm Cybercloudsec.

Like other phishing groups, Fin7 seizes on current events, and at the moment it is targeting tourists visiting France for the Summer Olympics later this month. Among the new Fin7 domains Silent Push found are several sites phishing people seeking tickets at the Louvre.

“We believe this research makes it clear that Fin7 is back and scaling up quickly,” Edwards said. “It’s our hope that the law enforcement community takes notice of this and puts Fin7 back on their radar for additional enforcement actions, and that quite a few of our competitors will be able to take this pool and expand into all or a good chunk of their infrastructure.”

Further reading:

Stark Industries Solutions: An Iron Hammer in the Cloud.

A 2022 deep dive on Fin7 from the Swiss threat intelligence firm Prodaft (PDF).

—————
