Countless smartphones seized in arrests and searches by police forces across the United States are being auctioned online without first having the data on them erased, a practice that can lead to crime victims being re-victimized, a new study found. In response, the largest online marketplace for items seized in U.S. law enforcement investigations says it now ensures that all phones sold through its platform will be data-wiped prior to auction.

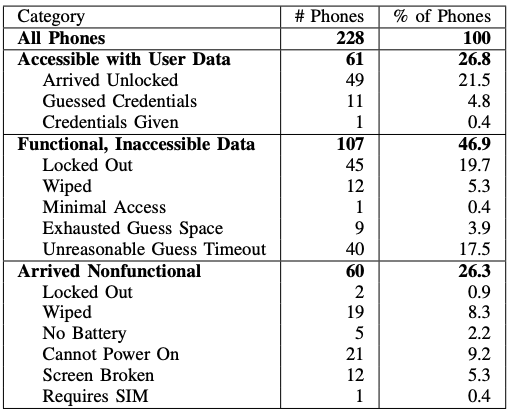

Researchers at the University of Maryland last year purchased 228 smartphones sold “as-is” from PropertyRoom.com, which bills itself as the largest auction house for police departments in the United States. Of the phones they won at auction (at an average of $18 per phone), the researchers found 49 had no PIN or passcode; they were able to guess an additional 11 of the PINs by trying the 40 most popular PINs or swipe patterns.

Phones may end up in police custody for any number of reasons, such as when the owner was involved in identity theft, and in such cases the phone itself was used as a tool to commit the crime.

“We initially expected that police would never auction these phones, as they would enable the buyer to recommit the same crimes as the previous owner,” the researchers explained in a paper released this month. “Unfortunately, that expectation has proven false in practice.”

The researchers said that while they could have employed more aggressive technological measures to work out the PINs for the remaining phones they bought, they concluded based on the sample that a great many of the devices they won at auction had probably not been data-wiped and were protected only by a PIN.

Beyond what you would expect from unwiped secondhand phones (every text message, picture, email, browser history, location history, etc.), the 61 phones they were able to access also contained significant amounts of data pertaining to crime, including victims’ data, the researchers found.

Some readers may be wondering at this point, “Why should we care about what happens to a criminal’s phone?” First off, it’s not entirely clear how these phones ended up for sale on PropertyRoom.

“Some folks are like, ‘Yeah, whatever, these are criminal phones,’ but are they?” said Dave Levin, an assistant professor of computer science at the University of Maryland.

“We started looking at state laws around what they’re supposed to do with lost or stolen property, and we found that most of it ends up going the same route as civil asset forfeiture,” Levin continued. “Meaning, if they can’t find out who owns something, it eventually becomes the property of the state and gets shipped out to these resellers.”

Also, the researchers found that many of the phones clearly had personal information on them regarding previous or intended targets of crime: A dozen of the phones had photographs of government-issued IDs. Three of those were on phones that apparently belonged to sex workers; their phones contained communications with clients.

An overview of the phone functionality and data accessibility for phones purchased by the researchers.

One phone had full credit files for eight different people on it. On another device they found a screenshot including 11 stolen credit cards that were apparently purchased from an online carding shop. On yet another, the former owner had apparently been active in a Telegram group chat that sold tutorials on how to run identity theft scams.

The most interesting phone from the batches they bought at auction was one with a sticky note attached that included the device’s PIN and the notation “Gry Keyed,” no doubt a reference to the Graykey software that is often used by law enforcement agencies to brute-force a mobile device PIN.

“That one had the PIN on the back,” Levin said. “The message chain on that phone had 24 Experian and TransUnion credit histories.”

The University of Maryland team said they took care in their research not to further the victimization of people whose information was on the devices they purchased from PropertyRoom.com. That involved ensuring that none of the devices could connect to the Internet when powered on, and scanning all images on the devices against known hashes for child sexual abuse material.
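For readers curious what scanning images “against known hashes” can look like in practice, here is a minimal sketch in Python. It is an illustration only: the article does not say which tools or hash types the researchers used, and screening of this kind often relies on perceptual hashes from vetted databases rather than the exact SHA-256 matching assumed here. The function names, the list of image extensions, and the `known_hashes` set are all hypothetical.

```python
import hashlib
from pathlib import Path

# Assumption: a small, illustrative set of image file extensions.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".heic", ".webp"}


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_known_images(root: Path, known_hashes: set[str]) -> list[Path]:
    """List image files under `root` whose digest is in `known_hashes`.

    Hypothetical helper: `known_hashes` stands in for a list of
    lowercase hex SHA-256 digests supplied by some trusted source.
    """
    matches = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            if sha256_of_file(path) in known_hashes:
                matches.append(path)
    return matches
```

Exact hashing of this sort only flags byte-for-byte copies of a known file; a resized or re-encoded image would not match, which is one reason perceptual hashing is commonly preferred for this kind of screening.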

It is common to find phones and other electronics for sale on auction platforms like eBay that have not been wiped of sensitive data, but in those cases eBay doesn’t possess the items being sold. In contrast, platforms like PropertyRoom obtain devices and resell them at auction directly.

PropertyRoom did not respond to multiple requests for comment. But the researchers said sometime in the past few months PropertyRoom began posting a notice stating that all mobile devices would be wiped of their data before being sold at auction.

“We informed them of our research in October 2022, and they responded that they would review our findings internally,” Levin said. “They stopped selling them for a while, but then it slowly came back, and then we made sure we won every auction. And all of the ones we got from that were indeed wiped, except there were four devices that had external SD [storage] cards in them that weren’t wiped.”

A copy of the University of Maryland study is here (PDF).
