Leading off our news on scams this week, a heads-up for DoorDash users, merchants, and Dashers too. A data breach of an undisclosed size may have impacted you.

Per an email sent by the company to “affected DoorDash users where required,” a third party gained access to data that may have included a mix of the following:

- First and last name

- Physical address

- Phone number

- Email address

You might have got the email too. And even if you didn’t, anyone who’s used DoorDash should take note.

As to the potential scope of the breach, DoorDash made no comment in its email or in a post on its help site. Of note, though, one of the help lines cited in that post is a French-language number, which implies that the breach might affect Canadian users as well. Any reach beyond the U.S. and Canada remains unclear.

The company’s Q2 financial report this year cites “hundreds of thousands of merchants, tens of millions of consumers, and millions of Dashers across over 30 countries every month.” Stats published elsewhere put the user base at more than 40 million people, which includes some 600,000 merchants.

The company underscored that no “sensitive” info like Social Security numbers (and potentially Canadian Social Insurance Numbers) was involved in the breach. This marks the third notable breach at the well-known delivery service, following incidents in 2019 and 2022.

What to do if you think you got caught up in the DoorDash breach

While the types of info involved here appear to be limited, any time there’s a breach, we suggest the following:

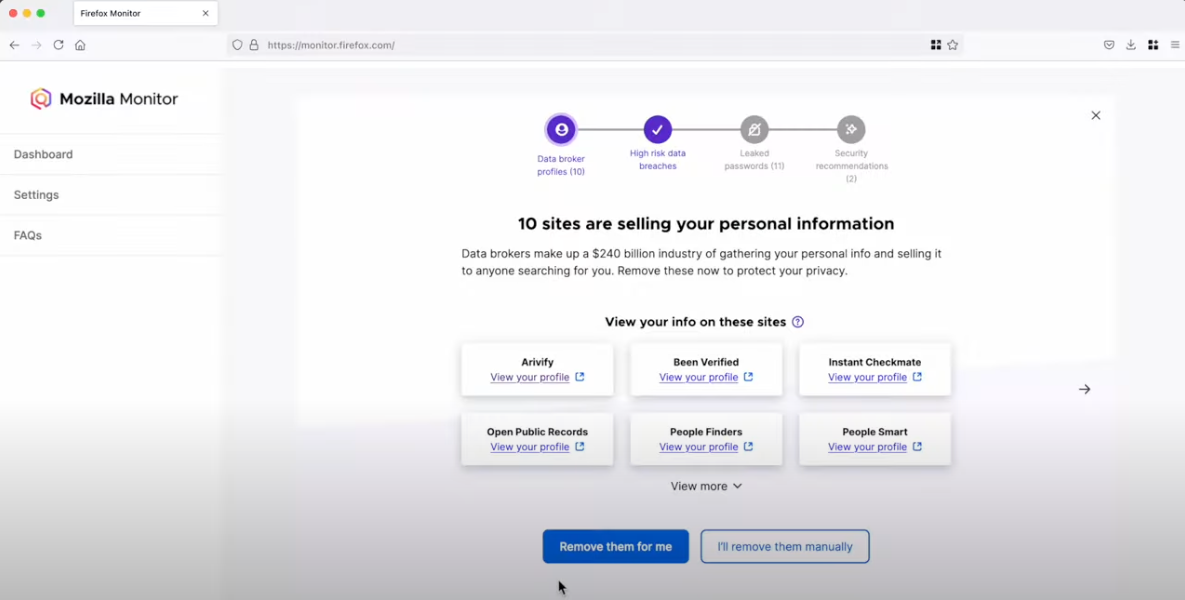

Protect your credit and identity. Checking your credit and getting identity theft protection can help keep you safer in the aftermath of a breach. Further, a security freeze can help prevent identity theft if you spot any unusual activity. You can get all three in place with our McAfee+ Advanced or Ultimate plans.

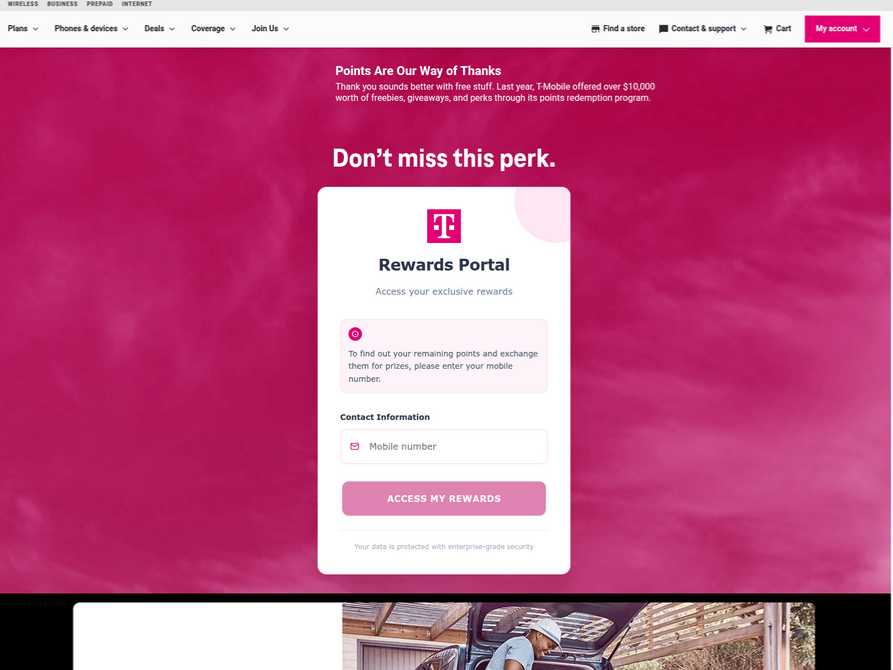

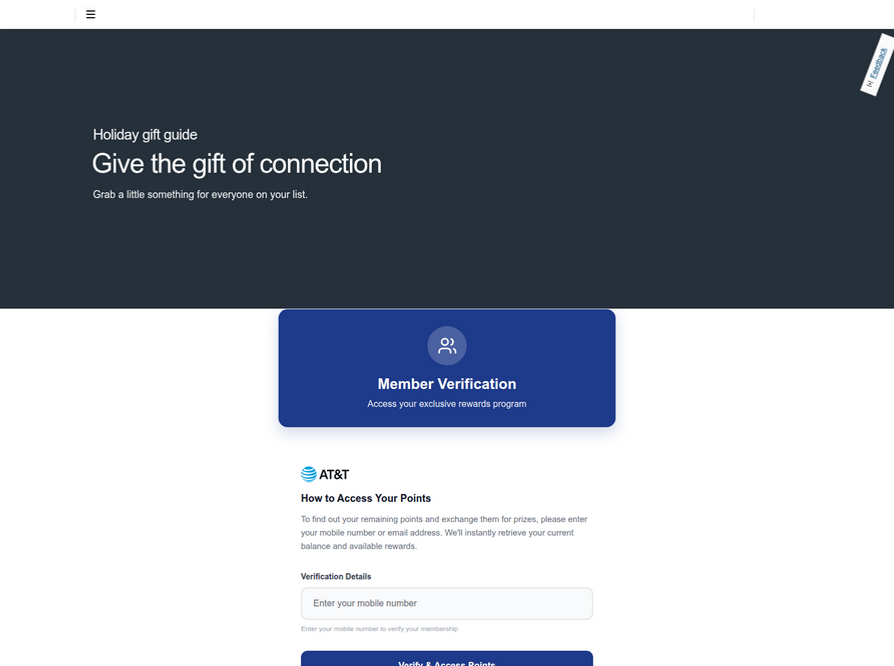

Keep an eye out for phishing attacks. With some personal info in hand, bad actors might seek out more. They might follow up a breach with rounds of phishing attacks that direct you to bogus sites designed to steal your personal info. As with any text or email you get from a company, make sure it’s legitimate before clicking or tapping on any links. Instead, go straight to the appropriate website or contact them by phone directly. Also, protections like our Scam Detector and Web Protection can alert you to scams and sketchy links before they take you somewhere you don’t want to go.

Update your passwords and use two-factor authentication. Changing your password is a strong preventive measure. Strong and unique passwords are best, which means never reusing your passwords across different sites and platforms. Using a password manager helps you stay on top of it all while also storing your passwords securely. Two-factor authentication adds a second check beyond your password, which makes a stolen password much harder to abuse.

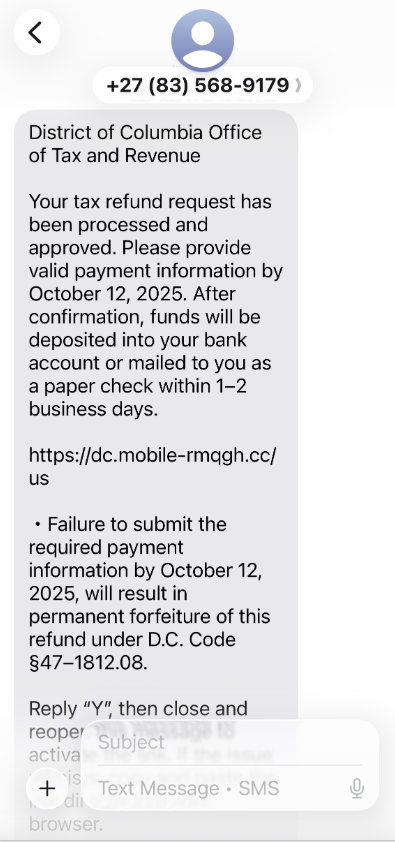

Attention travelers: Now boarding, a rise in flight cancellation scams

Even as the FAA lifted recent flight restrictions on Monday morning, scammers are still taking advantage of lingering uncertainty, and upcoming holiday travel, with a spate of flight cancellation scams.

How the scam works

Fake cancellation texts

The first comes via a text message saying that your flight has been canceled and that you must call or rebook quickly to avoid losing your seat, usually within 30 minutes. It’s a typical scammer trick, where they hook you with a combination of bad news and urgency. Of course, the phone number and the site don’t connect you with your airline. They connect you to a scammer, who walks away with your money and your card info to potentially rip you off again.

Fake airline sites in search results

The second uses paid search results. We’ve talked about this trick in our blogs before. Because paid search results appear ahead of organic results, scammers spin up bogus sites that mirror legitimate ones and promote them in paid search. In this way, they can look like a certain well-known airline and appear in search before the real airline’s listing. With that, people often mistakenly click the first link they see. From there, the scam plays out just as above as the scammer comes away with your money and card info.

How to avoid flight cancellation scams

Q: How can I confirm whether my flight is really canceled?

A: Check directly in your airline’s official app or website. Never click links in texts or emails.

Q: How can I spot a fake airline search result?

A: Look for “Ad”/“Sponsored,” confirm the URL, and check that the site uses HTTPS, not HTTP.

Q: Is there a tool that flags fake booking sites?

A: Scam-spotting tools like Scam Detector and Web Protection can identify sketchy links before you click.

In search, first isn’t always best.

Look closely to see if your top results are tagged with “Sponsored” or “Ad” in some way, realizing it might be in fine print. Further, look at the web address. Does it start with “https” (the “s” means secure)? Many scam sites simply use an unsecured “http” address. Also, does the link look right? For example, if you’re searching for “Generic Airlines,” is the link the expected “genericairlines dot-com” or something else? Scammers often try to spoof it in some way by adding to the name or by creating a subdomain like this: “genericairlines.rebookyourflight dot-com.”
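For readers who like to see the logic spelled out, here is a minimal Python sketch of the two address checks described above: does the link use “https,” and does its host actually belong to the airline’s domain? The function name `looks_like_official_link` is hypothetical, and the domains are just the made-up “Generic Airlines” examples from this post. Passing both checks doesn’t prove a site is legitimate, since scam sites can use HTTPS too, but failing either one is a red flag.

```python
from urllib.parse import urlsplit


def looks_like_official_link(url: str, expected_domain: str) -> bool:
    """Return True only if the URL uses HTTPS and its host is the expected
    domain or a subdomain of it. Hypothetical helper for illustration."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    expected = expected_domain.lower()

    # Check 1: the address starts with "https", not plain "http".
    uses_https = parts.scheme == "https"

    # Check 2: the host must END with the expected domain. A spoof like
    # "genericairlines.rebookyourflight.com" only STARTS with the airline's
    # name, so it fails this test.
    host_matches = host == expected or host.endswith("." + expected)

    return uses_https and host_matches


# Illustrative (made-up) links based on the example above.
for link in (
    "https://www.genericairlines.com/rebook",
    "https://genericairlines.rebookyourflight.com/",
    "http://genericairlines.com/",
):
    print(link, looks_like_official_link(link, "genericairlines.com"))
```

The key design choice is reading the address from right to left: the part just before “dot-com” is who really owns the site, and anything in front of it is only a label the owner chose.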

Get a scam detector to spot bogus links for you.

Even with these tips and tools, spotting bogus links with the naked eye can get tricky. Some look “close enough” to a legitimate link that you might overlook it. Yet a combination of features in our McAfee+ plans can help do that work for you. Our Scam Detector helps you stay safer with advanced scam detection technology built to spot and stop scams across text messages, emails, and videos. Likewise, our Web Protection will alert you if a link might take you to a sketchy site. It’ll also block those sites if you accidentally tap or click on a bad link.

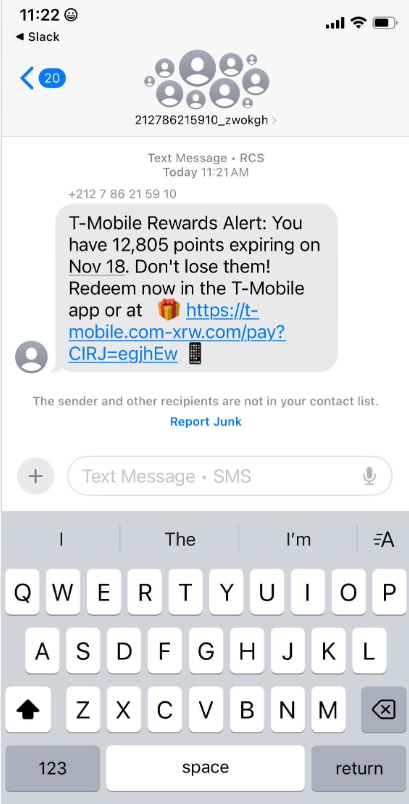

Scammers Hijack a Trusted Mass Texting Provider

You’ve probably seen plenty of messages sent by short code numbers. They’re the five- or six-digit codes that organizations use to send texts in place of a regular phone number. For example, your cable company might use one to send a text for resetting a streaming password. The same goes for your pharmacy letting you know a prescription is ready, your state’s DOT issuing a winter travel alert, and so on.

According to NBC News, scammers sent hundreds of thousands of texts using codes used by the state of New York, a charity, and a political organizing group. The article also cites an email sent to messaging providers by the U.S. Short Code Registry, an industry nonprofit that maintains those codes in the U.S. In the email, the registry said attempted attacks on messaging providers are on the rise.

What this means for the rest of us is that just about any text from an unknown number, and now short codes, might contain malicious links and content. It’s one more reason to arm yourself with the one-two punch of our Scam Detector and Web Protection.

What are short codes?

Short codes are 5–6 digit numbers used by pharmacies, utilities, banks, and government agencies to send official alerts.

Why this attack is unusual

Scammers didn’t spoof short codes—they gained access to real ones used by:

- The State of New York

- A charity

- A political organizing group

Why this matters

Even texts from legitimate short-code numbers can no longer be trusted at face value.

What to do now

- Treat any unexpected text—even from a short code—as suspicious.

- Don’t tap links.

- Verify by going directly to the official website or app.

Quick Scam Roundup

Consumers warned over AI chatbots giving inaccurate financial advice

- Our advice: Always verify recommendations with trusted financial sources

Why our own clicks are often cybercrime’s greatest allies

- Our advice: Many attacks rely on rushed or emotional decisions, so slow down before clicking

TikTok malware scam uses fake software activation guides to steal data

- Our advice: Download software only from official sources

We’ll be back after the Thanksgiving weekend with more updates, scam news, and ways to stay cyber safe.

The post This Week in Scams: DoorDash Breach and Fake Flight Cancellation Texts appeared first on McAfee Blog.
